What We Learned From 12 Months of OLXs (in 2016)

December 21, 2016

Product development isn’t the only thing we do at Learning Pool (formerly HT2 Labs) – in fact, a large proportion of our time is spent on researching how people learn in today’s world, and what we can do to help make the experience a better one.

I recently delivered a well-received session at the 2016 DevLearn Conference on my experiences of using Massive Open Online Courses (OLXs) over the past 12 months. (View the session slides on Slideshare).

In this post I’ll share some of the questions we asked and the findings that we made this year, as well as sharing a few tips on how you might apply them next time you’re designing social learning experiences.

But first, to set some context about what I do and where the data I refer to in this post has come from, let me give you a bit of background…

OLX Research & Development

Part of my role at Learning Pool (formerly HT2 Labs) is to design, build and facilitate online courses/programmes/OLXs etc that we then offer to anybody, anywhere, for free.

We do this to help move the industry forward by increasing knowledge and improving practice and, of course, to help people see, use and hopefully enjoy our platform (and then, hopefully, buy it!).

The OLXs that I’ve designed and facilitated since September 2015 (so approx 15 months at the time of writing) have ranged from:

- One that only lasted 48 hours (which we undertook to see what happened when we ran a very short, sharp, focused, ‘scarce’ OLX)

- One that lasted a whole year

- Several that are facilitated

- Several that are unfacilitated

Another reason we run these OLXs is to use them as a testing ground, so that we can continually refine our products and the way we use them, and share that insight with our clients, partners and, of course, people like yourselves.

Participants on these OLXs come from across the globe and if we could just attract a participant from Antarctica then we’d have every continent ‘ticked off’!

So, without further ado, here are some of the things that we’ve learned this year…

How Quickly Can an OLX Be Created Using Curated Content?

In the Spring of 2016 I designed and facilitated an OLX that would last only 48 hours. (This OLX is no longer available, but you can still catch up on what we learned in our hangouts with Dr Will Thalheimer and Julie Dirksen.)

Every single piece of content (with the exception of an introductory talking-head video) was curated from the internet, so the total design time for this course was in the region of half a working day (3-4 hours).

Had this OLX consisted of a greater amount of bespoke content, then it would have taken days or weeks to build. Using a platform such as Stream LXP (formerly Curatr) that allows you to quickly build courses using curated content is a huge time and money saver.

What can you take from this?

Perhaps the next time you’re hit with a fast turn-around (and after determining that it’s the right solution) you could turn to pre-existing content as opposed to an authoring tool.

How Does a Facilitated Course Fare Against a Non-Facilitated Course?

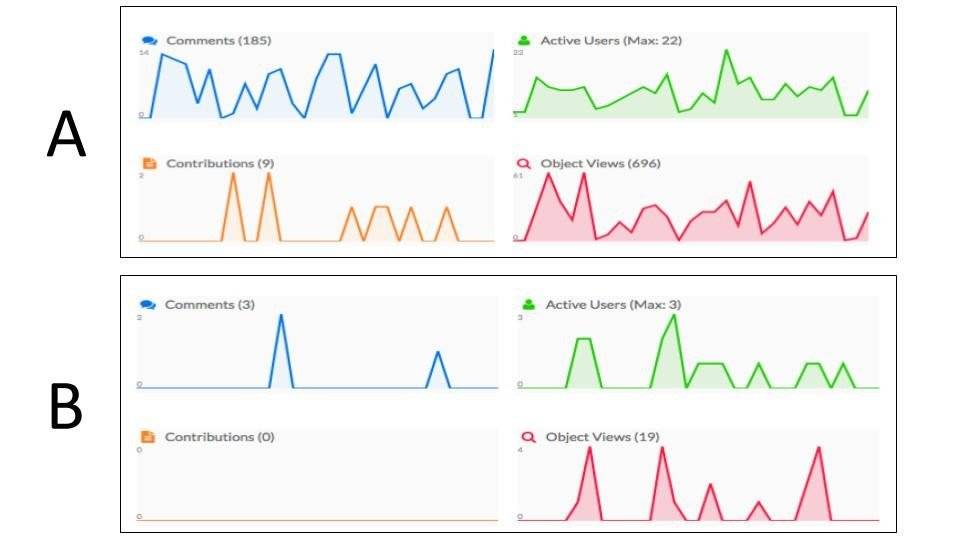

The screenshots on this slide were taken from 2 different OLXs (in September 2016):

- OLX A = Elearning Beyond The ‘Next’ Button (facilitated)

- OLX B = Exploring Social Learning (un-facilitated)

Look at the increased range of activity in OLX A compared to OLX B…

The increase in activity cannot be put down to a greater number of participants: as of 10 September 2016, OLX A had 556 participants and OLX B had roughly twice as many, with 1167.

We can therefore deduce that facilitation significantly helps with a range of engagement measures, including logging in, viewing material, social interaction and uploading user-generated material.

What can you take from this?

The next time you receive some resistance around designing a facilitated online activity, perhaps you’ll feel more confident about pushing back. Or, if you’re already running facilitated and unfacilitated courses, why not take a closer look at how each of them is behaving?

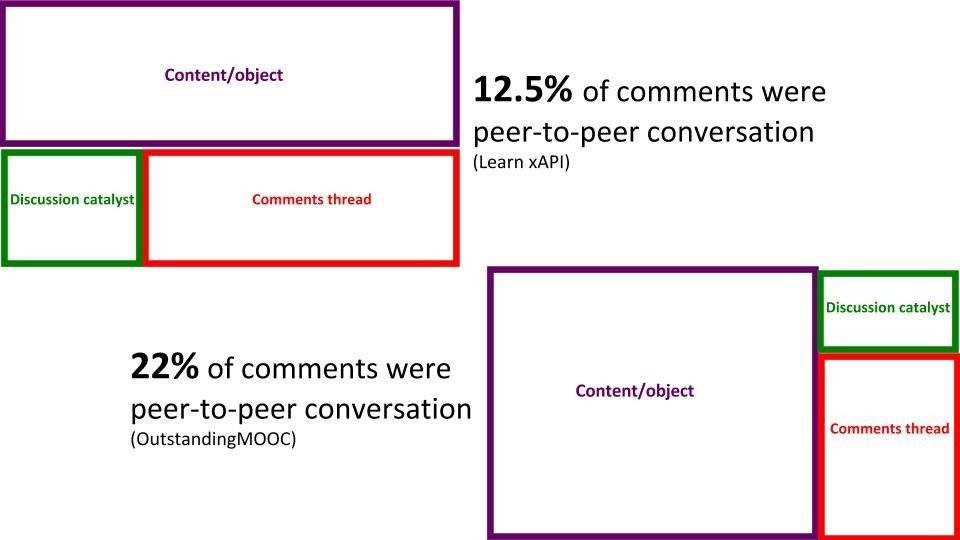

How Does The Placement of Social Features Influence Conversations?

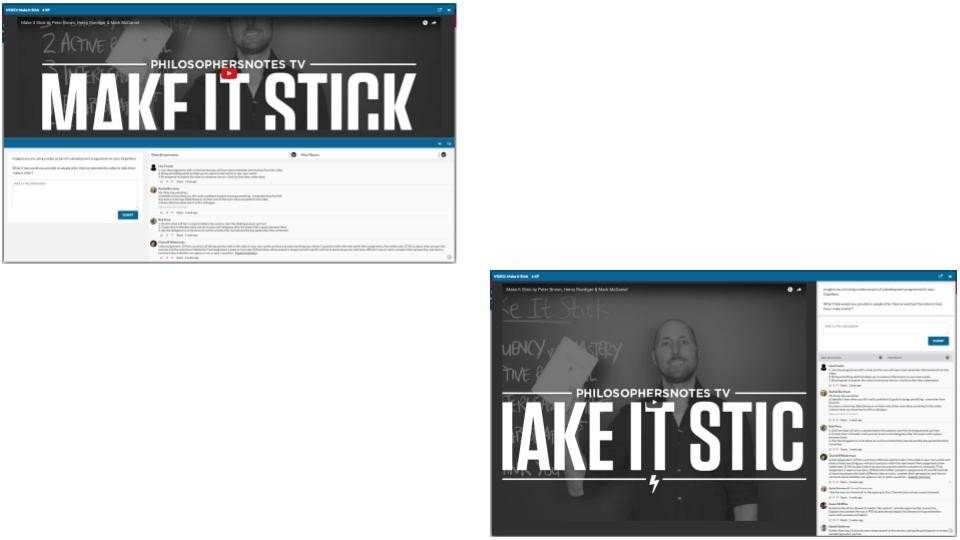

These particular screenshots were taken from the Elearning Beyond The ‘Next’ Button OLX, of a video object entitled ‘Make It Stick’, which formed part of the Spaced Learning level.

The 2 different layouts show the use of options that are possible in Stream LXP (formerly Curatr). But let’s strip out the specific content and look at the layouts themselves…

The difference in layouts led to an increase of approximately 10% in peer-to-peer conversation.

NOTE: These were 2 different courses (as indicated on the slide itself), which took place at different times, with different audiences, so this wasn’t a true A/B test; however this behavior has been noted in other OLXs that we have conducted.

What can you take from this?

If some of your tools/platforms allow you to modify the layout or user interface, perhaps you can run your own experiments to see which aspects of it you can improve?

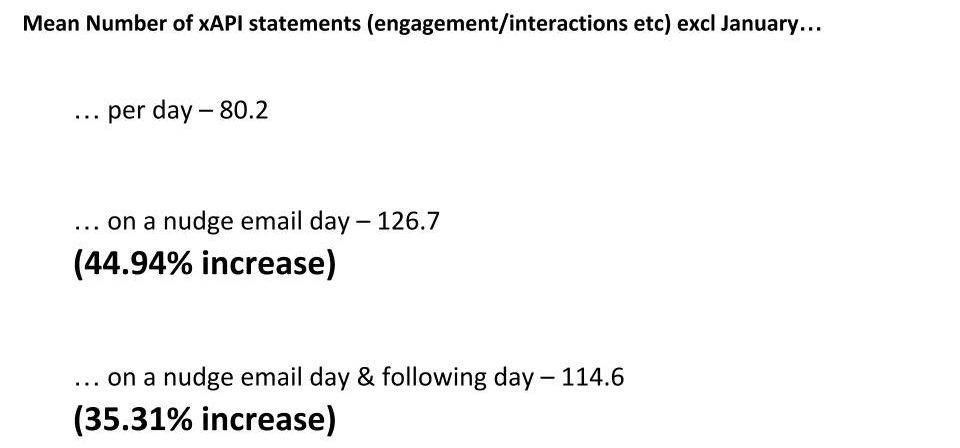

What Is The Benefit of Adopting a ‘Nudging’ Approach to Facilitation?

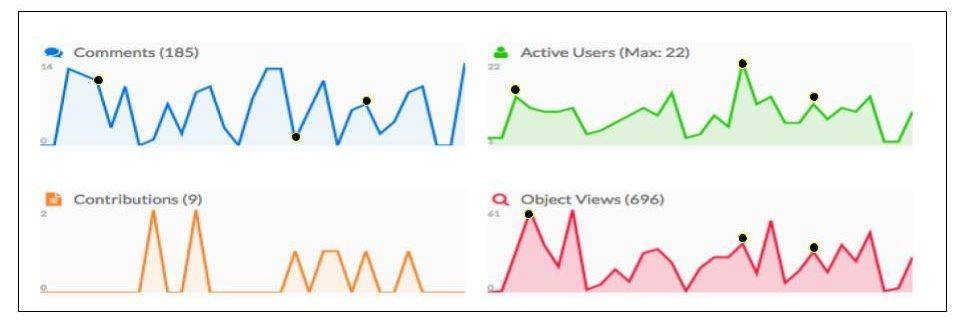

This screenshot was taken from the ‘Activity Graphs’ section of the Elearning Beyond The ‘Next’ Button OLX (in September 2016).

The right-hand edge of each graph indicates the date [that the images were captured] and the data shows a rolling 30-day period, so the left-hand point of each graph is approximately September 10, 2016.

This particular OLX has given us some good opportunities for research as it took place over a 12-month period, and we used it to look at how participants respond to the ‘nudge emails’ that we send out from the back-end of Stream LXP (formerly Curatr).

These nudge emails were sent once a week for the entire year (3 times a week for the first 2 weeks) on a ‘rolling’ schedule through the days of the week: Monday one week, Tuesday the next, Wednesday the week after, and so on (Saturday and Sunday were not included).
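To make the rolling schedule concrete, here is a minimal Python sketch of how such send dates could be generated. It is an illustration only, not how Stream LXP schedules its emails: the function name `rolling_nudge_dates` and the start date are hypothetical, and it covers just the once-a-week rotation. The 3-times-a-week pattern for the first 2 weeks is left out because the days used are not stated here.

```python
from datetime import date, timedelta


def rolling_nudge_dates(first_monday: date, weeks: int) -> list[date]:
    """Return one send date per week, rotating Monday through Friday."""
    if first_monday.weekday() != 0:
        raise ValueError("first_monday must be a Monday")
    dates = []
    for week in range(weeks):
        # 0 = Monday ... 4 = Friday, then wrap back to Monday.
        # Saturday and Sunday are never used.
        weekday_offset = week % 5
        dates.append(first_monday + timedelta(weeks=week, days=weekday_offset))
    return dates


# Hypothetical start date, chosen only because it falls on a Monday.
schedule = rolling_nudge_dates(date(2016, 1, 4), 6)
for send_date in schedule:
    print(send_date.strftime("%a %d %b %Y"))
```

Rotating the weekday like this means that, over a long course, every working day gets a roughly equal share of nudges, which makes it easier to compare how different days perform.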

The black dots on 3 of these graphs (above) indicate a day when an email nudge was sent.

What does it show us? Well, the results are somewhat mixed…

Take a look at those increases in xAPI statements (aka visible signs of engagement) in the course.

If you compare some of the nudge email data points, there appears to be a correlation between a nudge email being sent and activity within the OLX. However, there are also several occasions when sending a nudge email did not appear to have an impact, and some of the activity spikes on the graphs do not appear to be the result of a nudge email.
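If you can export daily activity counts from your own platform, one rough way to check for this kind of pattern is to compare average activity on nudge days with average activity on all other days. The sketch below assumes a simple mapping of date to number of xAPI statements. The function name `compare_nudge_days` is hypothetical and the figures are invented purely to show the calculation; they are not taken from our OLXs.

```python
from datetime import date
from statistics import mean


def compare_nudge_days(daily_statements, nudge_dates):
    """Return (mean activity on nudge days, mean activity on other days)."""
    nudged = [n for d, n in daily_statements.items() if d in nudge_dates]
    other = [n for d, n in daily_statements.items() if d not in nudge_dates]
    if not nudged or not other:
        raise ValueError("need at least one nudge day and one non-nudge day")
    return mean(nudged), mean(other)


# Invented example data: date -> number of xAPI statements recorded that day.
daily_statements = {
    date(2016, 9, 12): 40,
    date(2016, 9, 13): 20,
    date(2016, 9, 14): 10,
    date(2016, 9, 19): 30,
    date(2016, 9, 20): 30,
}
# Invented nudge days for the same period.
nudge_dates = {date(2016, 9, 12), date(2016, 9, 20)}

nudge_mean, other_mean = compare_nudge_days(daily_statements, nudge_dates)
print(f"nudge days: {nudge_mean}, other days: {other_mean}")
```

A difference between the two averages is only a starting point. As the graphs show, some spikes have nothing to do with a nudge, so treat a comparison like this as a prompt for a closer look rather than proof of cause and effect.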

What can you take from this?

Can you afford not to try and increase engagement with your material by those percentages?

Do your own experiments: What days work best for your people? Think about selling cycles, team meetings, deliveries, stock replenishment etc. It may not be the same for every person/region/department.

How Can I Apply Simple Gamification Techniques to Increase Engagement?

When considering using Gamification, be aware that it can be different to creating a ‘game’ – very different!

In our OLXs we used subtle gamification techniques to reward the behaviours that we wanted participants to demonstrate. These are:

- Viewing content

- Commenting

- Making quality comments

- Contributing content back into the OLX

For us, the gamification aspect and leaderboards are very much secondary to the development activity itself.
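As a rough illustration of how rewarding those four behaviours might translate into a leaderboard, here is a minimal Python sketch. The point values, behaviour keys and the `leaderboard` function are all assumptions made for the example; they do not describe how Stream LXP actually awards points.

```python
from collections import Counter

# Hypothetical point values: behaviours that take more effort earn more.
POINTS = {
    "view_content": 1,
    "comment": 2,
    "quality_comment": 5,
    "contribute_content": 10,
}


def leaderboard(events):
    """Total the points per participant and return them highest first."""
    scores = Counter()
    for participant, behaviour in events:
        scores[participant] += POINTS[behaviour]
    return scores.most_common()


# Invented activity log of (participant, behaviour) pairs.
events = [
    ("ana", "view_content"),
    ("ben", "comment"),
    ("ana", "quality_comment"),
    ("ben", "view_content"),
    ("cy", "contribute_content"),
]

for participant, score in leaderboard(events):
    print(participant, score)
```

Weighting the behaviours differently is the design choice that matters here: it lets you signal which contributions you value most, while the leaderboard itself stays in the background.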

What can you take from this?

When designing courses, which activities do you ask people to undertake that encourage the behaviours you expect to see? Would gamifying those activities help to encourage those behaviours?

What Could You Do in 2017?

This post provides just a snapshot of the things that you can do and learn using OLXs and some basic analytics. At Learning Pool (formerly HT2 Labs), we’re constantly researching and developing new methods and technologies to help organisations get more from their learning investments.

Head over to our Case Study pages to gain inspiration from how we’re helping customers succeed in this area, or if you want to find out more about what OLXs can do for you, check out our Solutions pages.
